Installation And Configuration

Software Requirements

Prebuilt Replay packages are distributed as Docker images, so regardless of your platform and OS distribution, you need Docker installed on your system.

If you do not want to run Replay in Docker, you can build it from source or contact us to build binaries for your platform.

Docker images for ARM64 and X86_64 are available in the GitHub Container Registry:

replay-x86 - for X86_64 CPUs;

replay-arm64 - for ARM64 CPUs.

Quick Installation

To run Replay in Docker, you can use the following command:

X86_64:

```bash
docker run -it --rm \
  --network host \
  -v "$(pwd)"/data:/opt/rocksdb \
  ghcr.io/insight-platform/replay-x86:main
```

ARM64:

```bash
docker run -it --rm \
  --network host \
  -v "$(pwd)"/data:/opt/rocksdb \
  ghcr.io/insight-platform/replay-arm64:main
```

The service uses the default configuration file and stores data in the `data` directory under the current working directory on the host machine.

Instead of the `main` tag, you can use a specific version of the image, e.g. `v0.1.1`. Refer to the GitHub Container Registry for the list of available tags.

Environment Variables

Replay in Docker is configured with the following environment variable:

`RUST_LOG` - sets the log level for the service. The default value is `info`; use `debug` or `trace` for more detailed logs. You can also change the log level for a particular scope.

You can also use optional environment variables in the configuration file. Refer to the Configuration Parameters section for more details.

Ports

Replay uses the following ports:

8080 - the port for the REST API;

5555 - the port for ingress data (Savant ZeroMQ protocol).

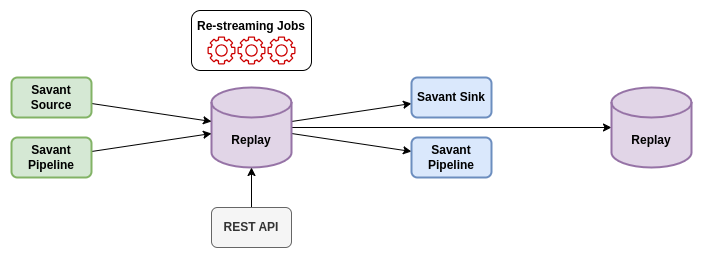

If the service is deployed as a dead-end consumer, no other ports are needed. However, depending on the configuration file, you may need an extra port open for egress (5556 in the default configuration) when the service is deployed as an intermediate node, as depicted in the next image:

Configuration File

The configuration file is a JSON file that contains the following parameters:

```json
{
  "common": {
    "pass_metadata_only": false,
    "management_port": 8080,
    "stats_period": {
      "secs": 60,
      "nanos": 0
    },
    "job_writer_cache_max_capacity": 1000,
    "job_writer_cache_ttl": {
      "secs": 60,
      "nanos": 0
    },
    "job_eviction_ttl": {
      "secs": 60,
      "nanos": 0
    }
  },
  "in_stream": {
    "url": "router+bind:tcp://0.0.0.0:5555",
    "receive_timeout": {
      "secs": 1,
      "nanos": 0
    },
    "receive_hwm": 1000,
    "topic_prefix_spec": {
      "none": null
    },
    "source_cache_size": 1000,
    "inflight_ops": 100
  },
  "out_stream": {
    "url": "pub+bind:tcp://0.0.0.0:5556",
    "send_timeout": {
      "secs": 1,
      "nanos": 0
    },
    "send_retries": 3,
    "receive_timeout": {
      "secs": 1,
      "nanos": 0
    },
    "receive_retries": 3,
    "send_hwm": 1000,
    "receive_hwm": 100,
    "inflight_ops": 100
  },
  "storage": {
    "rocksdb": {
      "path": "${DB_PATH:-/tmp/rocksdb}",
      "data_expiration_ttl": {
        "secs": 60,
        "nanos": 0
      }
    }
  }
}
```

The configuration above is used by default when you launch Replay without specifying a configuration file. To override it, mount your own configuration file into the container and pass its path as an argument in the launch command:

```bash
docker run -it --rm \
  --network host \
  -v "$(pwd)"/data:/opt/rocksdb \
  -v "$(pwd)"/config.json:/opt/config.json \
  ghcr.io/insight-platform/replay-x86:main /opt/config.json
```
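Judging by the two default URLs above, the `url` values seem to follow a `<socket>+<bind|connect>:<transport>://<address>` pattern. As a rough aid for deciding which host ports must be reachable, here is a minimal Python sketch that parses such URLs and lists the TCP ports a Replay instance would listen on. The `ZmqEndpoint`, `parse_endpoint` and `ports_to_open` names are hypothetical helpers, not part of Replay, and the URL grammar (including the `connect` mode) is an assumption inferred from the defaults.

```python
import json
from typing import List, NamedTuple, Optional


class ZmqEndpoint(NamedTuple):
    """Parsed form of a URL such as 'router+bind:tcp://0.0.0.0:5555' (assumed grammar)."""

    socket_type: str      # e.g. 'router', 'pub'
    mode: str             # 'bind' or 'connect'
    transport: str        # e.g. 'tcp', 'ipc'
    address: str          # everything after '://'
    port: Optional[int]   # TCP port, or None for non-TCP transports


def parse_endpoint(url: str) -> ZmqEndpoint:
    # Assumed layout: <socket_type>+<mode>:<transport>://<address>
    prefix, sep, address = url.partition("://")
    if not sep:
        raise ValueError(f"missing '://' in {url!r}")
    socket_part, _, transport = prefix.rpartition(":")
    socket_type, _, mode = socket_part.partition("+")
    port = int(address.rsplit(":", 1)[1]) if transport == "tcp" else None
    return ZmqEndpoint(socket_type, mode, transport, address, port)


def ports_to_open(config: dict) -> List[int]:
    """Return the REST API port plus the TCP ports of bind-mode stream sockets."""
    ports = [config["common"]["management_port"]]
    for section in ("in_stream", "out_stream"):
        stream = config.get(section)
        if not stream:  # section missing or null, e.g. no egress configured
            continue
        endpoint = parse_endpoint(stream["url"])
        # Only bind-mode sockets listen; connect-mode sockets dial out.
        if endpoint.mode == "bind" and endpoint.port is not None:
            ports.append(endpoint.port)
    return ports


if __name__ == "__main__":
    # Relevant subset of the default configuration; for a real file,
    # load it with json.load() instead.
    sample = json.loads("""
    {
      "common": {"management_port": 8080},
      "in_stream": {"url": "router+bind:tcp://0.0.0.0:5555"},
      "out_stream": {"url": "pub+bind:tcp://0.0.0.0:5556"}
    }
    """)
    print(ports_to_open(sample))  # [8080, 5555, 5556]
```

Only bind-mode sockets open a listening port on the Replay host; if you switch ingress or egress to a connecting socket, the corresponding port has to be open on the peer instead.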

Configuration Parameters

| Parameter | Description | Default |
|---|---|---|
| `common.pass_metadata_only` | If set to … | `false` |
| `common.management_port` | The port for the REST API. | `8080` |
| `common.stats_period` | The period for displaying statistics in logs. | `{"secs": 60, "nanos": 0}` |
| `common.job_writer_cache_max_capacity` | The maximum number of cached writer sockets for dynamic jobs. When you create many jobs, this cache allows sockets to be reused. | `1000` |
| `common.job_writer_cache_ttl` | The time-to-live for cached writer sockets for dynamic jobs. | `{"secs": 60, "nanos": 0}` |
| `common.job_eviction_ttl` | The time period during which completed jobs remain available in the API for status requests. | `{"secs": 60, "nanos": 0}` |
| `in_stream.url` | The URL for the data ingress in Savant ZMQ format. | `router+bind:tcp://0.0.0.0:5555` |
| `in_stream.receive_timeout` | The timeout for receiving data from the ingress stream. The default value is fine for most cases. | `{"secs": 1, "nanos": 0}` |
| `in_stream.receive_hwm` | The high-water mark for the ingress stream, used to control backpressure. Consult the ZeroMQ documentation for details. | `1000` |
| `in_stream.topic_prefix_spec` | The topic prefix specification for the ingress stream. | `{"none": null}` |
| `in_stream.source_cache_size` | The size of the whitelist cache, used only when prefix-based filtering is enabled. It allows quickly checking whether a source ID is already in the whitelist or must be checked. | `1000` |
| `in_stream.inflight_ops` | The maximum number of in-flight operations for the ingress stream. It allows the service to endure high load. The default value is fine for most cases. | `100` |
| | If set to … | |
| `out_stream` | The configuration for the data egress in Savant ZMQ format. This parameter can be set to … | |
| `out_stream.url` | The URL for the data egress in Savant ZMQ format. | `pub+bind:tcp://0.0.0.0:5556` |
| `out_stream.send_timeout` | The timeout for sending data to the egress stream. The default value is fine for most cases. | `{"secs": 1, "nanos": 0}` |
| `out_stream.send_retries` | The number of retries for sending data to the egress stream. The default value is fine for most cases; for unstable or busy recipients, you may want to increase it. | `3` |
| `out_stream.receive_timeout` | The timeout for receiving data from the egress stream. The default value is fine for most cases. Valid only for … | `{"secs": 1, "nanos": 0}` |
| `out_stream.receive_retries` | The number of retries for receiving data from the egress stream (crucial for …). | `3` |
| `out_stream.send_hwm` | The send high-water mark for the egress stream, used to control backpressure. Consult the ZeroMQ documentation for details. | `1000` |
| `out_stream.receive_hwm` | The receive high-water mark for the egress stream, used to control backpressure. Consult the ZeroMQ documentation for details. Change only if you are using … | `100` |
| `out_stream.inflight_ops` | The maximum number of in-flight operations for the egress stream. It allows the service to endure high load. The default value is fine for most cases. | `100` |
| `storage.rocksdb.path` | The path to the RocksDB storage. | `${DB_PATH:-/tmp/rocksdb}` |
| `storage.rocksdb.data_expiration_ttl` | The time-to-live for data in the RocksDB storage. | `{"secs": 60, "nanos": 0}` |
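Duration-valued parameters such as `stats_period`, the `*_ttl` parameters and the `*_timeout` parameters are written as objects with `secs` and `nanos` fields, which appears to be serde's default encoding of Rust's `std::time::Duration`. If you generate configuration files from Python, a small sketch for converting between that shape and `datetime.timedelta` might look as follows; `to_timedelta` and `from_timedelta` are hypothetical helper names, and the sketch assumes `nanos` stays below one second and durations are non-negative.

```python
from datetime import timedelta


def to_timedelta(value: dict) -> timedelta:
    """Convert a {"secs": ..., "nanos": ...} object to a timedelta.

    timedelta has microsecond resolution, so sub-microsecond nanos are dropped.
    """
    return timedelta(seconds=value["secs"], microseconds=value["nanos"] // 1000)


def from_timedelta(delta: timedelta) -> dict:
    """Convert a non-negative timedelta back to the {"secs", "nanos"} shape."""
    total_us = delta // timedelta(microseconds=1)  # integer number of microseconds
    secs, micros = divmod(total_us, 1_000_000)
    return {"secs": secs, "nanos": micros * 1000}


if __name__ == "__main__":
    print(to_timedelta({"secs": 60, "nanos": 0}))         # 0:01:00
    print(from_timedelta(timedelta(milliseconds=1500)))  # {'secs': 1, 'nanos': 500000000}
```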

Environment Variables in Configuration File

You can use environment variables in the configuration file. The syntax is ${VAR_NAME:-default_value}. If the environment variable is not set, the default value will be used.
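The substitution rule can be illustrated with a minimal Python sketch that expands `${VAR_NAME:-default_value}` placeholders in the raw configuration text before it is parsed as JSON. It only mirrors the behavior described above and is not Replay's implementation; `expand` is a hypothetical name. POSIX shells also fall back to the default when the variable is set but empty, and since this document does not say whether Replay does the same, the sketch uses the variable's value whenever it is set.

```python
import os
import re
from typing import Mapping, Optional

# ${NAME:-default}: NAME is an identifier; the default may be empty but cannot contain '}'.
_VAR = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*):-([^}]*)\}")


def expand(text: str, env: Optional[Mapping[str, str]] = None) -> str:
    """Replace every ${NAME:-default} in text with env[NAME], or default if NAME is unset."""
    env = os.environ if env is None else env
    return _VAR.sub(lambda m: env.get(m.group(1), m.group(2)), text)


if __name__ == "__main__":
    line = '"path": "${DB_PATH:-/tmp/rocksdb}"'
    print(expand(line, env={}))                           # "path": "/tmp/rocksdb"
    print(expand(line, env={"DB_PATH": "/opt/rocksdb"}))  # "path": "/opt/rocksdb"
```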

Deployment Best Practices

When you deploy Replay as a terminal node, the service bottlenecks are mostly related to the underlying storage. You can use any ingress socket type, such as sub, router or rep, as long as your storage keeps up with the load. The default configuration is fine for most cases.

When you deploy Replay as an intermediate node, the service can experience bottlenecks caused by the downstream nodes. Thus, if the next node can experience performance drops, we recommend placing a buffer adapter between Replay and that node. Without it, such situations may require careful maintenance and configuration changes, so a buffer adapter is the failsafe option.

A buffer adapter is also a must-have when the downstream node can restart or become unreachable over the network.